|

| Random Octet Truss Array (on shapeways) |

Wednesday, December 19, 2012

Octet Truss for Topology Optimization

Monday, December 17, 2012

Interesting Developments in the Numerical Python World

Hello all,

There is a lot happening in my life right now and I am spread quite thin among the various projects that I take an interest in. In particular, I am thrilled to publicly announce on this list that Continuum Analytics has received DARPA funding (to the tune of at least $3 million) for Blaze, Numba, and Bokeh which we are writing to take NumPy, SciPy, and visualization into the domain of very large data sets. This is part of the XDATA program, and I will be taking an active role in it. You can read more about Blaze here: http://blaze.pydata.org. You can read more about XDATA here: http://www.darpa.mil/Our_Work/I2O/Programs/XDATA.aspx

I personally think Blaze is the future of array-oriented computing in Python...

Passing the torch of NumPy and moving on to Blaze

Thursday, December 13, 2012

Open Source Topology Optimization for 3D Printing

|

| Rough Hex Output & Smooth Surface Reconstruction for Dogleg |

Sunday, November 4, 2012

Fedora BRL-CAD Compile Notes

I have not had as much luck with the Boolean operations in FreeCAD as I had hoped (it seems to be a problem with the underlying OpenCascade libraries), so I am compiling BRL-CAD.

Sunday, October 21, 2012

Monday, October 8, 2012

OpenFoam on Google Compute Engine

Sunday, October 7, 2012

Falcon 9 Flight 4, CRS-1

Monday, September 17, 2012

DMLS Wind Tunnel Models

Additive manufacturing, sometimes called direct digital fabrication or rapid prototyping, has been in the news quite a bit lately. I wrote a post recently for Dayton Diode about the many additive manufacturing options available for fabricating functional parts or tooling in response to comments on a piece in the Economist, and commented recently on Armed and Dangerous in a discussion about 3D printed handguns. There are just so many exciting processes and materials available for direct digital parts production today. Some of the work I've been doing recently to qualify one particular additive process for fabricating high-speed wind-tunnel models (abstract) was accepted for presentation at next year's Aerospace Sciences Meeting.

We used Direct Metal Laser Sintering (DMLS) to fabricate some proof-of-concept models in 17-4 stainless steel. DMLS is a trade name for the selective laser melting process developed by EOS. The neat thing about DMLS (and additive processes in general) is that complicated internal features like pressure tap lines can be printed in a single-piece model. Being able to reduce the parts count on a model to one while incorporating 20 or so instrumentation lines (limited only by the base area of our particular model) is really great, because one part is much faster and less expensive to design and fabricate than a multi-component model with complicated internal plumbing. The folks down at AEDC are also exploring the use of DMLS to fabricate tunnel force and moment balances for much the same reason we like it for models and others like it for injection mold tooling: intricate internal passages, in their case, for instrumentation cooling and wiring.

Monday, September 10, 2012

FreeCAD on Fedora

|

| UpAerospace 10 inch Payload Can (PTS10) |

There seems to be a great deal of difficulty (brlcad, opencascade) in getting any of the open source CAD programs incorporated into the Linux distributions that maintain high standards on acceptable license terms and packaging guidelines (Fedora). Luckily for a pragmatic guy like me, I can now install FreeCAD from the RPMFusion non-free repos.

yum install freecad

I'm excited to see if I can get better Boolean operation results (less manual mesh fixing) with FreeCAD for 3D printing than I've had with Blender.

Saturday, September 8, 2012

2011 AIAA Day-Cin AiS Entries

|

| Stripes |

|

| Breakdown |

Sunday, September 2, 2012

Human Powered Helicopter Flight Testing

Flight test is a tough business. Even more so when every second airborne requires near max physical exertion!

I hope these students get their structure fixed and get back in the air soon. This is some really great work. Their vehicle only weighs 71 lbs.

Check out their record setting human-powered helicopter endurance flight.

Thursday, August 30, 2012

RIP John Hunter

Fernando Perez fperez.net@gmail.com via scipy.org

10:59 PM (18 hours ago) to SciPy, SciPy, numfocus

Dear friends and colleagues,

I am terribly saddened to report that yesterday, August 28 2012 at 10am, John D. Hunter died from complications arising from cancer treatment at the University of Chicago hospital, after a brief but intense battle with this terrible illness. John is survived by his wife Miriam, his three daughters Rahel, Ava and Clara, his sisters Layne and Mary, and his mother Sarah.

Note: If you decide not to read any further (I know this is a long message), please go to this page for some important information about how you can thank John for everything he gave in a decade of generous contributions to the Python and scientific communities: http://numfocus.org/johnhunter.

Saturday, August 18, 2012

Experimental Design Criteria

Wednesday, August 15, 2012

Saturday, August 11, 2012

Validating the Prediction of Unobserved Quantities

Here's the abstract:

In predictive science, computational models are used to make predictions regarding the response of complex systems. Generally, there is no observational data for the predicted quantities (the quantities of interest or QoIs) prior to the computation, since otherwise predictions would not be necessary. Further, to maximize the utility of the predictions it is necessary to assess their reliability, i.e., to provide a quantitative characterization of the discrepancies between the prediction and the real world. Two aspects of this reliability assessment are judging the credibility of the prediction process and characterizing the uncertainty in the predicted quantities. These processes are commonly referred to as validation and uncertainty quantification (VUQ), and they are intimately linked. In typical VUQ approaches, model outputs for observed quantities are compared to experimental observations to test for consistency. While this consistency is necessary, it is not sufficient for extrapolative predictions because, by itself, it only ensures that the model can predict the observed quantities in the observed scenarios. Indeed, the fundamental challenge of predictive science is to make credible predictions with quantified uncertainties, despite the fact that the predictions are extrapolative. At the PECOS Center, a broadly applicable approach to VUQ for prediction of unobserved quantities has evolved. The approach incorporates stochastic modeling, calibration, validation, and predictive assessment phases where uncertainty representations are built, informed, and tested. This process is the subject of the current report, as well as several research issues that need to be addressed to make it applicable in practical problems.

Saturday, July 28, 2012

Mixed Effects for Fusion

Monday, July 23, 2012

Convergence for Falkner-Skan Solutions

There are some things about the paper that are not novel, and others that seem to be nonsense. It is well-known that there can be multiple solutions at given parameter values (non-uniqueness) for this equation, see White. There is the odd claim that "the flow starts to create shock waves in the medium [above the critical wedge angle], which is a representation of chaotic behavior in the flow field." Weak solutions (solutions with discontinuities/shocks) and chaotic dynamics are two different things. They use the fact that the method they choose does not converge when two solutions are possible as evidence of chaotic dynamics. Perhaps the iterates really do exhibit chaos, but this is purely an artifact of the method (i.e. there is no physical time in this problem, only the pseudo-time of the iterative scheme). By using a different approach you will get different "dynamics", and with proper choice of method, can get convergence (spectral even!) to any of the multiple solutions depending on what initial condition you give your iterative scheme. They introduce a parameter, \(\eta_{\infty}\), for the finite value of the independent variable at "infinity" (i.e. the domain is truncated). There is nothing wrong with this (actually it's a commonly used approach for this problem), but it is not a good idea to solve for this parameter as well as the shear at the wall in your Newton iteration. A more careful approach of mapping the boundary point "to infinity" as the grid resolution is increased (following one of Boyd's suggested mappings) removes the need to solve for this parameter, and gives spectral convergence for this problem even in the presence of non-uniqueness and the not uncommon vexation of a boundary condition defined at infinity (all of external aerodynamics has this helpful feature).
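To make the role of the truncation point and the wall shear concrete, here is a minimal sketch of a plain shooting solve of the Falkner-Skan equation, \(f''' + f f'' + \beta (1 - f'^2) = 0\) with \(f(0) = f'(0) = 0\) and \(f'(\eta_{\infty}) = 1\). This is not the mapped spectral approach recommended above, and it is not the method from the paper under discussion. It assumes the zero-pressure-gradient case (\(\beta = 0\)), a fixed \(\eta_{\infty}\) that is not solved for, classical RK4, and a hand-picked bisection bracket on the wall shear \(f''(0)\); the function names are hypothetical.

```python
def falkner_skan_rhs(state, beta):
    """Right-hand side of the first-order system for (f, f', f'')."""
    f, fp, fpp = state
    return (fp, fpp, -f * fpp - beta * (1.0 - fp * fp))


def edge_velocity(shear, beta, eta_inf, n_steps=1000):
    """Integrate from the wall to eta_inf with RK4 and return f'(eta_inf)."""
    h = eta_inf / n_steps
    y = (0.0, 0.0, shear)  # f(0) = 0, f'(0) = 0, f''(0) = guessed wall shear
    for _ in range(n_steps):
        k1 = falkner_skan_rhs(y, beta)
        k2 = falkner_skan_rhs(tuple(a + 0.5 * h * k for a, k in zip(y, k1)), beta)
        k3 = falkner_skan_rhs(tuple(a + 0.5 * h * k for a, k in zip(y, k2)), beta)
        k4 = falkner_skan_rhs(tuple(a + h * k for a, k in zip(y, k3)), beta)
        y = tuple(
            a + h / 6.0 * (p + 2.0 * q + 2.0 * r + s)
            for a, p, q, r, s in zip(y, k1, k2, k3, k4)
        )
    return y[1]


def wall_shear(beta=0.0, eta_inf=10.0, lo=0.1, hi=1.0, tol=1e-10):
    """Bisect on f''(0) until f'(eta_inf) = 1 (the bracket is an assumption)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if edge_velocity(mid, beta, eta_inf) > 1.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


shear_far = wall_shear(eta_inf=10.0)
shear_near = wall_shear(eta_inf=8.0)
print(shear_far, shear_near)
```

For this benign case the computed shear barely changes between the two truncation points, which is the sense in which \(\eta_{\infty}\) can simply be fixed "large enough" rather than treated as an unknown in the iteration.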

Sunday, July 22, 2012

VV&UQ for Historic Masonry Structures

Abstract: This publication focuses on the Verification and Validation (V&V) of numerical models for establishing confidence in model predictions, and demonstrates the complete process through a case study application completed on the Washington National Cathedral masonry vaults. The goal herein is to understand where modeling errors and uncertainty originate from, and obtain model predictions that are statistically consistent with their respective measurements. The approach presented in this manuscript is comprehensive, as it considers all major sources of errors and uncertainty that originate from numerical solutions of differential equations (numerical uncertainty), imprecise model input parameter values (parameter uncertainty), incomplete definitions of underlying physics due to assumptions and idealizations (bias error) and variability in measurements (experimental uncertainty). The experimental evidence necessary for reducing the uncertainty in model predictions is obtained through in situ vibration measurements conducted on the masonry vaults of Washington National Cathedral. By deploying the prescribed method, uncertainty in model predictions is reduced by approximately two thirds.

Highlights:

- Developed a finite element model of Washington National Cathedral masonry vaults.

- Carried out code and solution verification to address numerical uncertainties.

- Conducted in situ vibration experiments to identify modal parameters of the vaults.

- Calibrated and validated model to mitigate parameter uncertainty and systematic bias.

- Demonstrated a two thirds reduction in the prediction uncertainty through V&V.

I haven't read the full-text yet, but it looks like a coherent (Bayesian) and pragmatic approach to the problem.

Saturday, July 21, 2012

Rocket Risk

If we assume that each launch has the same probability of success, then these are simple risk calculations to make, e.g. see these slides. The posterior distribution for the probability of success, \(\theta\), is

\[ p(\theta | r, n) = \mathrm{Beta}(\alpha + r, \beta + n - r) \]

where \(r\) is the number of successes, \(n\) is the number of trials, and \(\alpha\) and \(\beta\) are parameters of the Beta distribution prior. What values of parameters should we choose for the prior? I like \(\alpha=\beta=1\), though you could probably make a case for anything consistent with \(\alpha+\beta-2=0\). Many people say that risk = probability * consequence. I don't know what the consequences are in this case, and under that approach NASA's chart doesn't make any sense (you could have a low risk with a high probability of failing to launch an inconsequential payload), so I'll stick to just the probabilities of launch success, and leave worrying about the consequences to others.
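A minimal sketch of the posterior update above, assuming the uniform prior \(\alpha=\beta=1\) and a made-up launch record (3 successes in 4 launches, purely for illustration and not any real vehicle's history). The function names are hypothetical.

```python
def beta_posterior(successes, trials, alpha=1.0, beta=1.0):
    """Return the Beta posterior parameters (alpha + r, beta + n - r)."""
    return alpha + successes, beta + trials - successes


def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


def beta_variance(a, b):
    """Variance of a Beta(a, b) distribution."""
    return a * b / ((a + b) ** 2 * (a + b + 1.0))


# Hypothetical record: r = 3 successes in n = 4 launches.
a_post, b_post = beta_posterior(successes=3, trials=4)
posterior_mean = beta_mean(a_post, b_post)
posterior_var = beta_variance(a_post, b_post)
print(a_post, b_post, posterior_mean, posterior_var)
```

Under the i.i.d. assumption the posterior mean is also the predictive probability that the next launch succeeds; with the uniform prior it reduces to \((r+1)/(n+2)\).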

Since NASA specifies a number of consecutive successes, there is already an indication that assuming the trials are independent and identically distributed (i.i.d.) is unrealistic. If your expensive rocket blows up, you usually do your best to find out why and fix the cause of failure. That way on the next launch your rocket has a higher probability of success than it previously did.

Saturday, July 7, 2012

DARPA's Silver Birds

Eugen Sänger, father of the hypersonic boost-glide global bomber concept, may well have been prescient when he wrote, "Nevertheless, my silver birds will fly!"

Wednesday, July 4, 2012

Experimental Designs with Orthogonal Basis

Monday, July 2, 2012

HIFiRE 2 Videos

Multiple high-speed views:

Additional coverage on Parabolic Arc.

Saturday, June 30, 2012

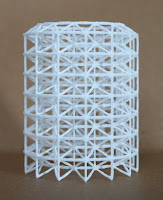

3D Printed Isogrid and Octet Truss

|

| Isogrid Cylinder |

|

| Octet Truss Cylinder |

|

| Octet Truss with Panels |

Update: I tried out the cloud.netfabb.com STL fixing service. It seems to work automagically. They also have a basic version of their software that is free (but not Free).

Update: Some nice isogrid parts, FDM ABS by Fabbr.

Close-up showing the I-beam cross-section.

Saturday, June 23, 2012

Notre Dame V&V Workshop Notes

There are a couple highlights from the workshop that I'll mention before dumping the chronological notes.

James Kamm gave a really great presentation on a variety of exact solutions for 1-D Euler equations. He covered the well known shock tube solutions that you'd find in a good text on Riemann solvers, plus a whole lot more. Thomas Zang presented work on a NASA standard for verification and validation that grew out of the fatal Columbia mishap. The focus is not so much prescribing what a user of modeling and simulation will do to accomplish V&V, but requiring that what is done is clearly documented. If nothing is done then the documentation just requires a clear statement that nothing was done for that aspect of verification, validation, or uncertainty quantification. I like this approach because it's impossible for a standards writer to know every problem well enough to prescribe the right approach, but requiring someone to come out and put in writing "nothing was done" often means they'll go do at least something that's appropriate for their particular problem.

I think that in the area of Validation I'm philosophically closest to Bob Moser who seems to be a good Bayesian (slides here). Bill Oberkampf (who, along with Chris Roy, recently wrote a V&V book) did some pretty unconvincing hand-waving to avoid biting the bullet and taking a Bayesian approach to validation, which he (and plenty of other folks at the workshop) view as too subjective. I had a more recent chance to talk with Chris Roy about their proposed area validation metric (which is in some ASME standards), and the ad-hoc, subjective nature of the multiplier for their distribution location shifts seems a lot more treacherous to me than specifying a prior. The fact that they use frequentist distributional arguments to justify a non-distributional fudge factor (which changes based on how the analyst feels about the consequences of the decision; sometimes it's 2, but for really important decisions maybe you should use 3) doesn't help them make the case that they are successfully avoiding "too much subjectivity". Of course, subjectivity is unavoidable in decision making. There are two options. The subjective parts of decision support can be explicitly addressed in a coherent fashion, or they can be pretended away by an expanding multitude of ad-hoceries.

I appreciated the way Patrick Roache wrapped up the workshop, “decisions will continue to be made on the basis of expert opinion and circumstantial evidence, but Bill [Oberkampf] and I just don’t think that deserves the dignity of the term validation.” In product development we’ll often be faced with acting to accept risk based on un-validated predictions. In fact, that could be one operational definition of experimentation. Since subjectivity is inescapable, I resort to pragmatism. What is useful? It is not useful to say “validated models are good” or “unvalidated models are bad”. It is more useful to recognize validation activities as signals to the decision maker about how much risk they are accepting when they act on the basis of simulations and precious little else.

Tuesday, May 22, 2012

Second Falcon 9/Dragon Launch

Lots of coverage on Parabolic Arc and Nuit Blanche.

Tuesday, April 3, 2012

Highly Replicable Research

So what did we do to make this paper extra super replicable?

If you go to the paper Web site, you'll find:

- a link to the paper itself, in preprint form, stored at the arXiv site;

- a tutorial for running the software on a Linux machine hosted in the Amazon cloud;

- a git repository for the software itself (hosted on github);

- a git repository for the LaTeX paper and analysis scripts (also hosted on github), including an ipython notebook for generating the figures (more about that in my next blog post);

- instructions on how to start up an EC2 cloud instance, install the software and paper pipeline, and build most of the analyses and all of the figures from scratch;

- the data necessary to run the pipeline;

- some of the output data discussed in the paper.

(Whew, it makes me a little tired just to type all that...)

What this means is that you can regenerate substantial amounts (but not all) of the data and analyses underlying the paper from scratch, all on your own, on a machine that you can rent for something like 50 cents an hour. (It'll cost you about $4 -- 8 hours of CPU -- to re-run everything, plus some incidental costs for things like downloads.)

I really think it is a neat use of the Amazon elastic compute cloud.

Saturday, March 31, 2012

Mathematical Foundations of V&V Pre-pub NAS Report

Section 2.10 presents a case study for applying VV&UQ methods to climate models. The introductory paragraph of that section reads,

The previous discussion noted that uncertainty is pervasive in models of real-world phenomena, and climate models are no exception. In this case study, the committee is not judging the validity or results of any of the existing climate models, nor is it minimizing the successes of climate modeling. The intent is only to discuss how VVUQ methods in these models can be used to improve the reliability of the predictions that they yield and provide a much more complete picture of this crucial scientific arena.

As noted in the front-matter, since this is a pre-print it is still subject to editorial revision.

Friday, March 30, 2012

Empirical Imperatives

There is a belief that you can simply read imperatives from ‘the evidence’, and to organise society accordingly, as if instructed by mother nature herself. And worse still, there is reluctance on behalf of many engaged in the debate to recognise that this very technocratic, naturalistic and bureaucratic way of looking at the world reflects very much a broader tendency in contemporary politics. To point any of these problems out is to ‘deny the science’. ‘Science’, then, is a gun to the head.

Shrinking the Sceptics

Sunday, February 26, 2012

SpaceX Dragon Panorama

|

| SpaceX Dragon capsule, internal iso/orthogrid panels and grid stiffened structure, forward/port view |

Sunday, February 12, 2012

Sears--Haack Body for Mini-Estes

We have a little company here in Dayton that does print on demand (Fabbr) with Makerbots. They specialize in printing RepRap kits, but I think I'm going to see if they can print me a little rocket to use with Estes mini-motors. The 1/4 and 1/2 A motors are 13 mm in diameter and 44 mm long.

The first thing you need to print a part with these hobby printers is an STL file. I followed a somewhat tortuous route to generating one.

First I made a little python script to find the minimum volume Sears-Haack body that would fit a 13x44 mm cylinder. The bold black curve is the minimum volume body; it happens to have a length of twice the motor length.

As you can see in the script, I also dumped an svg file of that curve. This is easily imported into Blender. Then the svg curve must be converted into a Mesh, and the Spin method applied to generate the body of revolution. I played with the number of steps to get a mesh that looked like it had surface faces with near unit aspect ratio (not that it really matters, but old habits die hard).

Now I should be able to add some fins and export an stl from Blender for my rapid prototyping friends to play with. The design goal for this rocket will be to have positive static margin with the motor in the rocket, but neutral or negative static margin once the ejection charge pops it out the back (that way it does a tumble recovery).
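The length optimization described earlier in this post can be sketched as follows. This is not the original script (it produces no plot or svg output); it is a minimal reconstruction that assumes the standard Sears-Haack radius distribution \(r(x) = R_{max}\,[4x(1-x)]^{3/4}\) with volume \(V = \frac{3\pi^2}{16} R_{max}^2 L\), a motor cylinder of radius 6.5 mm and length 44 mm centered in the body, and a golden-section search over the body length. The function names and search bracket are hypothetical.

```python
import math


def sears_haack_volume(length, cyl_radius=6.5, cyl_length=44.0):
    """Volume of the smallest Sears-Haack body of a given length that
    encloses a centered cylinder (all dimensions in mm)."""
    u = cyl_length / length
    # The body radius at the cylinder ends must equal the cylinder radius:
    # R_max * (1 - u**2)**0.75 = cyl_radius
    r_max = cyl_radius / (1.0 - u * u) ** 0.75
    return 3.0 * math.pi ** 2 / 16.0 * r_max ** 2 * length


def golden_section_min(func, lo, hi, tol=1e-6):
    """Locate the minimizer of a unimodal function on [lo, hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    while hi - lo > tol:
        c = hi - inv_phi * (hi - lo)
        d = lo + inv_phi * (hi - lo)
        if func(c) < func(d):
            hi = d
        else:
            lo = c
    return 0.5 * (lo + hi)


# Search body lengths from just over the motor length up to ten times it.
best_length = golden_section_min(sears_haack_volume, 44.0 * 1.01, 44.0 * 10.0)
print(best_length, sears_haack_volume(best_length))
```

Under these assumptions the search lands on a body length of twice the motor length, consistent with the result noted above.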

Saturday, February 11, 2012

OpenFoam Now with Fedora RPMs

Thursday, January 19, 2012

McCain's Hangar Queen on Trend

|

| Entire Defense budget to buy one airplane |

Tuesday, January 3, 2012

Nuclear Politics Prior

One of the recurring themes of Climate Resistance (which I've mentioned approvingly before) is that the politics around the solutions proposed for climate change are prior to any consideration of the science of the environment (or the more interesting question Lorenz asked about the existence and uniqueness of long-time averages of the earth's weather).

This state of politics prior is unavoidable, and therefore not unique to the field of climate policy. I think this talk by Kirk Sorensen on the history of US breeder reactor development provides another good example.

See this extensive remix for lots of info on LFTR.

"There's been a very bipartisan approach to scaring the public." Kirk Sorensen (~1:20 or so)

"The whole aim of practical politics is to keep the populace alarmed (and hence clamorous to be led to safety) by menacing it with an endless series of hobgoblins, all of them imaginary." H.L. Mencken

Monday, January 2, 2012

Setting up a project on Github

I've been an SVN user for a while, but it seems like more and more projects are going to distributed version control systems like Git, so I wanted to learn how to use Git. I found this crash course on Git for SVN users which provides a useful Rosetta stone, and this warning:

SVN is based on an older version control system called CVS, and its designers followed a simple rule: when in doubt, do like CVS. Git also takes a form of inspiration from CVS, and its designer also followed a simple rule: when in doubt, do exactly the opposite of CVS. This approach led to many technical innovations, but also led to a lot of extra headscratching among migrators. You have been warned.

This sounds a lot like Linus Torvalds' talk, WWCVSND: What Would CVS Not Do?

Github has a set of steps for setting up on linux. Git comes in the Fedora repos (and probably every other repo), so install is easy. A nice bit of documentation that comes with the install is Everyday GIT With 20 Commands Or So.

Since I already use password-less ssh to hop between the boxes in my little network, I didn't move my old public key as in the instructions. I created a config file in the .ssh directory containing these lines:

Host github.com

User git

Port 22

Hostname github.com

IdentityFile ~/.ssh/id_rsa_git

TCPKeepAlive yes

IdentitiesOnly yes

Where id_rsa_git.pub is the key I uploaded to github. Authenticating to github is then just:

ssh -T git@github.com

Then you accept their RSA key like you would for doing any other ssh login.

The next thing is to create a repo. Clicking through the instructions brings you to a page with several "next steps", which for my example are:

mkdir FalknerSkan

cd FalknerSkan

git init

touch README

git add README

git commit -m 'first commit'

git remote add origin git@github.com:jstults/FalknerSkan.git

git push -u origin master

Which gives some output ending in something like:

Branch master set up to track remote branch master from origin.

If you'd like, you can read up on the Falkner-Skan ODE at the viscous aero course on MIT's OCW:

Sunday, January 1, 2012

Now you have N problems

(10:40) It's not that regulators don't understand information technology, because it should be possible to be a non-expert and still make a good law. MPs and Congressmen and so on are elected to represent districts and people, not disciplines and issues.

That couple of sentences reminded me of a recent post on single-issue advocacy by Roger Pielke Jr. So, in that spirit, here's a fun word game: How applicable is Doctorow's criticism if you substitute "climate" for "copyright" below?

(22:20) But the reality is, copyright legislation gets as far as it does precisely because it's not taken seriously, which is why on one hand, Canada has had Parliament after Parliament introduce one stupid copyright bill after another, but on the other hand, Parliament after Parliament has failed to actually vote on the bill.

[...]

It's why the World Intellectual Property Organization is gulled time and again into enacting crazed, pig-ignorant copyright proposals because when the nations of the world send their U.N. missions to Geneva, they send water experts, not copyright experts; they send health experts, not copyright experts; they send agriculture experts, not copyright experts, because copyright is just not important to pretty much everyone!

Canada's Parliament didn't vote on its copyright bills because, of all the things that Canada needs to do, fixing copyright ranks well below health emergencies on first nations reservations, exploiting the oil patch in Alberta, interceding in sectarian resentments among French- and English-speakers, solving resources crises in the nation's fisheries, and a thousand other issues! The triviality of copyright tells you that when other sectors of the economy start to evince concerns about the internet and the PC, that copyright will be revealed for a minor skirmish, and not a war. Why would other sectors nurse grudges against computers? Well, because the world we live in today is /made/ of computers. We don't have cars anymore, we have computers we ride in; we don't have airplanes anymore, we have flying Solaris boxes with a big bucketful of SCADA controllers [laughter]; a 3D printer is not a device, it's a peripheral, and it only works connected to a computer; a radio is no longer a crystal, it's a general-purpose computer with a fast ADC and a fast DAC and some software.