Thursday, March 25, 2010

Ohio Personal Income

Interesting how DC is such an outlier in these graphs; it's good to be king.

Here's one that's just interesting, not necessarily Dayton, Ohio-centric (you can drag the labels around if it starts out too cluttered):

Rumors of the death of US manufacturing seem greatly exaggerated.

Wednesday, March 17, 2010

Zen Uncertainty

Zen Uncertainty: Attempts to understand uncertainty are mere illusions; there is only suffering.

Should we give up? No, there's plenty we can do to make the suffering more bearable. Lo and Mueller give an uncertainty taxonomy of five levels in their 'Physics Envy' paper:

-- WARNING: Physics Envy May Be Hazardous To Your Wealth!

- Complete Certainty: the idealized deterministic world

- Risk without Uncertainty: an honest casino

- Fully Reducible Uncertainty: the odds in the honest casino are not posted, we have to learn them from limited experience

- Partially Reducible Uncertainty: we're not quite sure which game at the casino we're playing so we have to learn that as well as the odds based on limited experience

- Irreducible Uncertainty: we're not even sure if we're in the casino, we might be outside splashing around in the fountain...

Section 2 of the paper provides a nice historical overview of the early work of Paul A. Samuelson, who single-handedly brought statistical mechanics to the economists, and they have never been the same since. Samuelson acknowledged the deep connection between his work and physics:

Perhaps most relevant of all for the genesis of Foundations, Edwin Bidwell Wilson (1879–1964) was at Harvard. Wilson was the great Willard Gibbs’s last (and, essentially only) protege at Yale. He was a mathematician, a mathematical physicist, a mathematical statistician, a mathematical economist, a polymath who had done first-class work in many fields of the natural and social sciences. I was perhaps his only disciple . . . I was vaccinated early to understand that economics and physics could share the same formal mathematical theorems (Euler’s theorem on homogeneous functions, Weierstrass’s theorems on constrained maxima, Jacobi determinant identities underlying Le Chatelier reactions, etc.), while still not resting on the same empirical foundations and certainties.

Related to this theme, there's an interesting recent article over on the Mobjectivist site about using ideas from physics to model income distributions.

Lo and Mueller propose to operationalize their uncertainty taxonomy with a 2-D checklist (table). The levels provide the columns across the top, and there is a row for each business component of the activity being evaluated. Here is their description:

The idea of an uncertainty checklist is straightforward: it is organized as a table whose columns correspond to the five levels of uncertainty of Section 3, and whose rows correspond to all the business components of the activity under consideration. Each entry consists of all aspects of that business component falling into the particular level of uncertainty, and ideally, the individuals and policies responsible for addressing their proper execution and potential failings.

This seems like an idea that could be adapted and combined with best practices for model validation (and checklist sorts of approaches) in helping to define what sorts of uncertainties we are operating under when we make decisions using science-based decision support products.
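As a rough illustration of how such a checklist might be held in code, here is a minimal sketch. The level names come from the taxonomy above; the row names, entries, and owners are hypothetical examples for a simulation-based decision support product, not anything taken from Lo and Mueller's paper.

```python
# Columns of the checklist: the five levels of the taxonomy.
LEVELS = (
    "Complete Certainty",
    "Risk without Uncertainty",
    "Fully Reducible Uncertainty",
    "Partially Reducible Uncertainty",
    "Irreducible Uncertainty",
)


def make_checklist(components):
    """Return an empty table: one row per component, one column per level."""
    return {name: {level: [] for level in LEVELS} for name in components}


def add_entry(checklist, component, level, aspect, owner=None):
    """File one aspect of a component under a level, with an optional owner."""
    if level not in LEVELS:
        raise ValueError("unknown uncertainty level: %r" % (level,))
    checklist[component][level].append((aspect, owner))


# Hypothetical rows and entries, for illustration only.
checklist = make_checklist(["discretization", "closure model", "scenario inputs"])
add_entry(checklist, "discretization", "Fully Reducible Uncertainty",
          "grid convergence error", owner="verification lead")
add_entry(checklist, "closure model", "Partially Reducible Uncertainty",
          "choice among competing viscosity fits", owner="model developer")
add_entry(checklist, "scenario inputs", "Irreducible Uncertainty",
          "future forcing outside the calibrated range")

for component, row in checklist.items():
    for level in LEVELS:
        for aspect, owner in row[level]:
            print(component, "|", level, "|", aspect, "|", owner)
```

Keeping the owner next to each entry mirrors the paper's suggestion that every cell name the people or policies responsible for it.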

Their final paragraph echoes Lindzen's sentiments about climate science:

While physicists have historically been inspired by mathematical elegance and driven by pure logic, they also rely on the ongoing dialogue between theoretical ideals and experimental evidence. This rational, incremental, and sometimes painstaking debate between idealized quantitative models and harsh empirical realities has led to many breakthroughs in physics, and provides a clear guide for the role and limitations of quantitative methods in financial markets, and the future of finance.

-- WARNING: Physics Envy May Be Hazardous To Your Wealth!

Saturday, March 13, 2010

The Social Ethic and Appeals for Technocracy

If we don't revisit the notion of collective responsibility and sobriety soon, our descendants will pay a heavy price.

-- Michael Tobis

What we lack is an ethical framework for inter-generational responsibilities (such as “pass on a habitable planet to our children”). Cost-benefit analysis avoids these ethical questions, at a time when we desperately need to address them.

-- Steve Easterbrook

and claims of the failure of democracy or public discourse on the other,

They have to see the need for pain, to sense the danger of doing nothing. They have to lead their leaders as well as follow – once they switch off, nothing good happens easily, if at all.

Wanted: an eco prophet

Perhaps we have to accept that there is no simple solution to public disbelief in science. The battle over climate change suggests that the more clearly you spell the problem out, the more you turn people away. If they don’t want to know, nothing and no one will reach them.

-- George Monbiot

Setting aside for now the unsound conflation of scientific insight with political consensus, the sentiment at the base of this meme is just as troubling. It is basically an argument that our old ethical theories and extant systems of governance are incapable of solving the problems, real or perceived, facing modern civilization. W.H. Whyte already wrote the response to this line of thought more ably than I ever could (though his target was mainly the rise of bureaucracy in business, and associated societal changes, his critique seems topical in this case as well).

My charge against the Social Ethic, then, is on precisely the grounds of contemporary usefulness it so venerates. It is not, I submit, suited to the needs of "modern man," but is instead reinforcing precisely that which least needs to be emphasized, and at the expense of that which does. Here is my bill of particulars

It is redundant. In some societies individualism has been carried to such extremes as to endanger the society itself, and there exist today examples of individualism corrupted into a narrow egoism which prevents effective co-operation. This is a danger, there is no question of that. But is it today as pressing a danger as the obverse -- a climate which inhibits individual initiative and imagination, and the courage to exercise it against group opinion? Society is itself an education in the extrovert values, and I think it can be rightfully argued that rarely has there been a society which has preached them so hard. No man is an island unto himself, but how John Donne would writhe to hear how often and for what reasons, the thought is so tiresomely repeated.

It is premature. To preach technique before content, the skills of getting along isolated from why and to what end the getting along is for, does not produce maturity. It produces a sort of permanent prematurity, and this is true not only of the child being taught life adjustment but of the organization man being taught well-roundedness. This is a sterile concept, and those who believe that they have mastered human relations can blind themselves to the true bases of co-operation. People don't co-operate just to co-operate; they co-operate for substantive reasons, to achieve certain goals, and unless these are comprehended the little manipulations for morale, team spirit, and such are fruitless.

And they can be worse than fruitless. Held up as the end-all of organization leadership, the skills of human relations easily tempt the new administrator into the practice of a tyranny more subtle and more pervasive than that which he means to supplant. No one wants to see the old authoritarian return, but at least it could be said of him that what he wanted primarily from you was your sweat. The new man wants your soul.

It is delusory. It is easy to fight obvious tyranny; it is not easy to fight benevolence, and few things are more calculated to rob the individual of his defenses than the idea that his interests and those of society can be wholly compatible. The good society is the one in which they are most compatible, but they can never be completely so, and one who lets The Organization be the judge ultimately sacrifices himself. Like the good society, the good organization encourages individual expression, and many have done so. But there always remains some conflict between individual and The Organization. Is The Organization to be the arbiter? The Organization will look to its own interests, but it will look to the individual's only as The Organization interprets them.

It is static. Organization of itself has no dynamic. The dynamic is in the individual and thus he must not only question how The Organization interprets his interests, he must question how it interprets its own. The bold new plan he feels is necessary, for example. He cannot trust that The Organization will recognize this. Most probably, it will not. It is the nature of a new idea to confound current consensus -- even the mildly new idea. It might be patently in order, but, unfortunately, the group has a vested interest in its miseries as well as its pleasures, and irrational as this may be, many a member of organization life can recall instances where the group clung to known disadvantages rather than risk the anarchies of change.

It is self-destructive. The quest for normalcy, as we have seen in suburbia, is one of the great breeders of neuroses, and the Social Ethic only serves to exacerbate them. What is normalcy? We practice a great mutual deception. Everyone knows that they themselves are different -- that they are shy in company, perhaps, or dislike many things most people seem to like -- but they are not sure that other people are different too. Like the norms of personality testing, they see about them the sum of efforts of people like themselves to seem as normal as others and possibly a little more so. It is hard enough to learn to live with our inadequacies, and we need not make ourselves more miserable by a spurious ideal of middle-class adjustment. Adjustment to what? Nobody really knows -- and the tragedy is that they don't realize that the so-confident-seeming other people don't know either.

[...]

Science and technology do not have to be antithetical to individualism. To hold that they must be antithetical, as many European intellectuals do, is a sort of utopianism in reverse. For a century Europeans projected their dreams into America; now they are projecting their fears, and in so doing they are falling into the very trap they accuse us of. Attributing a power to the machine that we have never felt, they speak of it almost as if it were animistic and had a will of its own over and above the control of man. Thus they see our failures as inevitable, and those few who are consistent enough to pursue the logic of their charge imply that there is no hope to be found except through a retreat to the past.

This is a hopelessly pessimistic view.

The Organization Man

Whyte goes on to dismiss the nostalgic and naive caricature of individualism bandied about by the right, calling instead for a pragmatic recognition of the natural tension between the individual and society. He holds that "[t]he central ideal -- that the individual, rather than society, must be the paramount end [...] is as vital and as applicable today as ever", impending climate catastrophes notwithstanding.

Wednesday, March 10, 2010

Parameterization, Calibration and Validation

Suppose you need to use an empirical closure for, say, the viscosity of your fluid or the equation of state. Usually you develop this sort of thing with some physical insight based on kinetic theory and lab tests of various types to get fits over a useful range of temperatures and pressures, then you use this relation in your code (generally without modification based on the code’s output). An alternative way to approach this closure problem would be to run your code with variations in viscosity models and parameter values and pick the set that gave you outputs that were in good agreement with high-entropy functionals (like an average solution state: there are many ways to get the same answer, and nothing to choose between them) for a particular set of flows. This would be a sort of inverse modeling approach. Either way gives you an answer that can demonstrate consistency with your data, but there’s probably a big difference in the predictive capability between the models so developed.

That is a surprisingly accurate description of the process actually used to tune parameters in climate general circulation models (GCMs).

Here's a relevant section from an overview paper [pdf]:

The CAPT premise is that, as long as the dynamical state of the forecast remains close to that of the verifying analyses, the systematic forecast errors are predominantly due to deficiencies in the model parameterizations. [...] In themselves, these differences do not automatically determine a needed parameterization change, but they can provide developers with insights as to how this might be done. Then if changing the parameterization is able to render a closer match between parameterized variables and the evaluation data, and if this change also reduces the systematic forecast errors or any compensating errors that are exposed, the modified parameterization can be regarded as more physically realistic than its predecessor.

The highlighted conclusion is the troublesome leap. The process is an essentially post-hoc procedure based on goodness of fit rather than physical insight. This is contrary to established best practice in developing simulations with credible predictive capability. Sound physics rather than extensive empirical tuning is paramount if we're to have confidence in predictions. The paper also provides some discussion that goes to the IVP/BVP distinction:

But will the CAPT methodology enhance the performance of the GCM in climate simulations? In principle, yes: modified parameterizations that reduce systematic forecast errors should also improve the simulation of climate statistics, which are just aggregations of the detailed evolution of the model [see my comment here]. [...] Some systematic climate errors develop more slowly, however. [...] It follows that slow climate errors such as these are not as readily amenable to examination by a forecast-based approach.

Closing the loop on long-term predictions is tough (this point is often made in the literature, but rarely mentioned in the press). The paper continues:

Thus, once parameterization improvements are provisionally indicated by better short-range forecasts, enhancements in model performance also must be demonstrated in progressively longer (extended-range, seasonal, interannual, decadal, etc.) simulations. GCM parameterizations that are improved at short time scales also may require some further "tuning" of free parameters in order to achieve radiative balance in climate mode.

The discussion of data assimilation, initialization and transfer between different grid resolutions that follows that section is interesting and worth a read. They do address one of the concerns I brought up in my discussion with Robert, which was model comparison based on high-configurational-entropy functionals (like a globally averaged state):

If a modified parameterization is able to reduce systematic forecast errors (defined relative to high-quality observations and NWP analyses), it then can be regarded as more physically realistic than its predecessor.

Please don't misunderstand my criticism; the process described is a useful part of the model diagnostic toolbox. However, it can easily fool us into overconfidence in the simulation's predictive capability, because we may mistake what is essentially an extensive and continuous model calibration process for validation.
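The worry about calibrating against averaged quantities can be made concrete with a toy problem. The sketch below is nothing like a GCM: it assumes a made-up two-parameter decay model and a made-up calibration target (the average over a short window), and shows that several parameter sets match the target equally well while disagreeing badly outside the window.

```python
import numpy as np


def model(t, a, b):
    """Hypothetical two-parameter model: amplitude a, decay rate b."""
    return a * np.exp(-b * t)


def window_mean(a, b):
    """Exact average of the model over the calibration window 0 <= t <= 1."""
    return a * (1.0 - np.exp(-b)) / b


target_mean = 1.0              # the only "observation" used for tuning
decay_rates = [0.5, 1.0, 2.0]  # three candidate parameterizations

# For each decay rate, choose the amplitude that reproduces the target mean.
calibrated = [(target_mean * b / (1.0 - np.exp(-b)), b) for b in decay_rates]

t_predict = 3.0  # well outside the calibration window
predictions = [model(t_predict, a, b) for a, b in calibrated]

for (a, b), y_pred in zip(calibrated, predictions):
    print("a=%.3f b=%.1f window mean=%.3f y(%.0f)=%.4f"
          % (a, b, window_mean(a, b), t_predict, y_pred))
```

All three candidates are perfectly "calibrated", and nothing in the averaged target lets us choose between them. Only data that resolves the time evolution, or independent physical insight about the decay rate, can do that.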

Tuesday, March 9, 2010

Devil Take the Hindmost

The local Chemineer boys are striking; here are some funny comments from DDN:

The city of Atlanta and the State of Georgia would happily welcome all of the manufacturing facilities and corporate offices of Chemineer into our productive, low tax and right to work state.

Why worry with the burdens of constrictive Union contracts and astronomical wages? Property taxes and operating expenses are much lower down here and we'd welcome you with open arms.

NCR has already made the switch - you can too! Say good-bye to unions and hello to sunny Atlanta!

ComeOnDown 9:45 AM, 3/9/2010

Capital always wins out; it's nearly as fluid and mobile as ideas. The Boeing machinists thought they could hold the company management over a barrel because of the big new 787 development, and management opened a second line in South Carolina (rather than bring outsourced sub-assemblies 'back home' to Seattle). What makes Chemineer's Dayton operation any better than an equivalent facility and folks down south of the Mason-Dixon?

Monday, March 8, 2010

Energy Use Per Capita

Saturday, March 6, 2010

Uncertain Rate in FFT-based Oil Extraction Model

This is an extension to the FFT-based oil extraction model (see the Mobjectivist blog for more details). The basic approach remains the same: each phase of the process is modeled by a convolution of the rate in the previous phase with an exponential decay. Now we are going to apply some of the ideas I presented in Uncertainty Quantification with Stochastic Collocation.

Here is the original Python function which applied the convolutions using an FFT,

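    # NumPy stands in for the sp namespace used in the original post
    import numpy as np
    from numpy.fft import fft, ifft

    def exponential_convolution(x, y, r):
        """Convolve an exponential function e^(-r*x) with y, uses FFT."""
        expo = np.exp(-r*x)
        expo = expo / np.sum(expo)  # normalize so the kernel sums to one
        return ifft(fft(expo) * fft(y)).real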

and here is the function modified to use the complex step derivative calculation method:

    # NumPy stands in for the sp namespace used in the original post
    import numpy as np
    from numpy.fft import fft, ifft

    def expo_conv_csd(x, y, r):
        """Convolve an exponential function e^(-r*x) with y, uses FFT. Use
        the complex step derivative method to estimate the slope with
        respect to the rate.

        See Cervino and Bewley, 'On the extension of the complex-step
        derivative technique to pseudospectral algorithms',
        J.Comp.Phys. 187 (2003).
        """
        # r must be complex: the nominal rate plus a tiny imaginary step
        expo = np.exp(-r*x)
        # normalize:
        expo = expo / np.sum(expo)
        # need to transform the real and imag parts separately to avoid
        # problems due to subtractive cancellation:
        expo.real = ifft(fft(expo.real) * fft(y)).real
        expo.imag = ifft(fft(expo.imag) * fft(y)).real
        return expo

Notice that we need to transform the real and imaginary parts of our solution separately so that the nice subtractive-cancellation-avoidance properties of the method are retained (see [1] for a more detailed discussion).

Now we have the result of the convolution in the real part of the return value and the sensitivity to changes in rate in the imaginary part (see Figure 1).

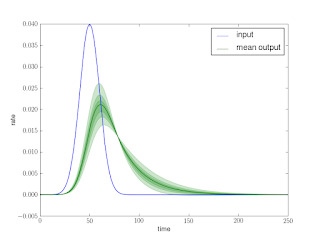
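As a quick check on the sensitivity carried in the imaginary part, the sketch below perturbs the rate by a tiny imaginary step and compares the result with a central finite difference. The nominal rate `r0`, the step sizes `h` and `dr`, and the Gaussian input pulse are illustrative assumptions, not values from the analysis above. The function is restated with NumPy so the block runs on its own.

```python
import numpy as np
from numpy.fft import fft, ifft


def expo_conv_csd(x, y, r):
    """FFT convolution of a normalized exp(-r*x) kernel with y.

    The real and imaginary parts are transformed separately, as in the post.
    """
    expo = np.exp(-r * x)
    expo = expo / np.sum(expo)
    real = ifft(fft(expo.real) * fft(y)).real
    imag = ifft(fft(expo.imag) * fft(y)).real
    return real + 1j * imag


x = np.linspace(0.0, 50.0, 256)
y = np.exp(-0.5 * ((x - 10.0) / 2.0) ** 2)  # assumed input pulse
r0 = 0.2                                     # assumed nominal rate

# Complex step: there is no subtraction, so h can be made extremely small.
h = 1.0e-20
out = expo_conv_csd(x, y, r0 + 1j * h)
y_out = out.real        # convolution at the nominal rate
dy_dr = out.imag / h    # sensitivity of the output to the rate

# Central finite difference for comparison (limited by cancellation error).
dr = 1.0e-6
fd = (expo_conv_csd(x, y, r0 + dr).real
      - expo_conv_csd(x, y, r0 - dr).real) / (2.0 * dr)

print("largest difference between the two estimates:", np.max(np.abs(dy_dr - fd)))
```

The finite difference needs a carefully chosen `dr` to balance truncation against round-off, whereas the complex step gives the slope from a single evaluation with no such trade-off.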

Now we proceed as shown before in the UQ post, except this time we have a separate probability density for the result at each time. Figure 2 shows the mean prediction as well as shading based on confidence intervals (at the 0.3, 0.6, and 0.9 level). The Python to generate this figure is shown below; it uses the alpha parameter (transparency) available in the matplotlib plotting package in a similar way to the visualization approach shown in this post.

    import matplotlib.pyplot as p  # assumed alias for the plotting module

    p.figure()
    p.plot(x, y, label="input")
    p.plot(x, y_mean, 'g', label="mean output")
    # overlapping translucent bands: the narrower intervals end up darker
    p.fill_between(x, y_ci30[0], y_ci30[1], where=None, color='g', alpha=0.2)
    p.fill_between(x, y_ci60[0], y_ci60[1], where=None, color='g', alpha=0.2)
    p.fill_between(x, y_ci90[0], y_ci90[1], where=None, color='g', alpha=0.2)
    p.legend(loc=0)
    p.xlabel("time")
    p.ylabel("rate")
    p.savefig("uncertain_rate.png")
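The plotting code takes `y_mean` and the `y_ci*` pairs as given. One way such bands could be built from a first-order expansion is sketched below. It assumes a normally distributed rate with standard deviation `sigma`, which is an assumption made here for illustration; the nominal output `y0` and sensitivity `dy_dr` are hypothetical numbers standing in for the real and scaled imaginary parts of the complex-step result.

```python
from statistics import NormalDist

import numpy as np


def first_order_band(y0, dy_dr, sigma, level):
    """Central interval at the given level for y(r) ~ y0 + dy_dr * (r - r0),
    assuming r is normally distributed about r0 with standard deviation sigma.
    """
    z = NormalDist().inv_cdf(0.5 + level / 2.0)  # two-sided normal quantile
    half_width = z * sigma * np.abs(dy_dr)
    return (y0 - half_width, y0 + half_width)


# Hypothetical nominal output and rate sensitivity at four times.
y0 = np.array([0.00, 0.02, 0.40, 0.10])
dy_dr = np.array([0.0, 1.5, -2.0, -0.5])
sigma = 0.05

y_mean = y0  # under the linearization the mean equals the nominal output
y_ci30 = first_order_band(y0, dy_dr, sigma, 0.3)
y_ci60 = first_order_band(y0, dy_dr, sigma, 0.6)
y_ci90 = first_order_band(y0, dy_dr, sigma, 0.9)

print("0.9 lower bound:", y_ci90[0])
```

Because the linearized band is symmetric about the nominal output, nothing stops its lower edge from crossing zero wherever the output is small and the sensitivity is large.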

Compare the result in Figure 2 with this Monte Carlo analysis. It’s not quite apples-to-apples, because that Monte Carlo includes uncertain discovery (the input) as well. Also, this result is just a first-order collocation, so it assumes that the rate sensitivity is constant with variations in the result. This is not actually the case, and you can see if you look closely that the 0.9-interval actually dips into negative territory, which is unphysical. This is a good example of the sort of common-sense checking Hamming suggested in his “N+1” essay as a requirement for any successful computation: Are the known conservation laws obeyed by the result? [2]. Clearly we are not going to “overshoot” on extraction and begin pumping oil back into the ground.

On a somewhat related note, Hamming had another insightful thing to say about the importance of checking the correctness of a calculation: It is the experience of the author that a good theoretician can account for almost anything produced, right or wrong, or at least he can waste a lot of time worrying about whether it is right or wrong [2]. Don’t worry, verify!
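In that spirit, here is a minimal sketch of three cheap checks on the FFT convolution itself: agreement with a direct (slow) circular sum, conservation of the total, and non-negativity of the output. The grid, input pulse, and rate are assumed values chosen only for illustration.

```python
import numpy as np
from numpy.fft import fft, ifft


def direct_circular_convolution(kernel, y):
    """O(n^2) reference: out[k] = sum_j kernel[j] * y[(k - j) mod n]."""
    n = len(y)
    idx = np.arange(n)
    return np.array([np.sum(kernel * y[(k - idx) % n]) for k in range(n)])


x = np.linspace(0.0, 50.0, 128)
y = np.exp(-0.5 * ((x - 10.0) / 2.0) ** 2)  # assumed input pulse
r = 0.2                                      # assumed rate

kernel = np.exp(-r * x)
kernel /= kernel.sum()                       # normalized, as in the post
out = ifft(fft(kernel) * fft(y)).real

# 1. The FFT route should reproduce the direct circular sum.
matches_direct = bool(np.allclose(out, direct_circular_convolution(kernel, y)))
# 2. A kernel that sums to one only delays the input; the total is unchanged.
total_conserved = bool(np.isclose(out.sum(), y.sum()))
# 3. A positive input and a positive kernel cannot give negative rates.
nonnegative = bool(np.all(out >= -1e-12))

print(matches_direct, total_conserved, nonnegative)
```

Note that the FFT product is a circular convolution, so the input should have decayed (or been zero-padded) by the end of the window. Otherwise the tail wraps around to early times.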

References

[1] Cervino, L.I., Bewley, T.R., “On the extension of the complex-step derivative technique to pseudospectral algorithms,” Journal of Computational Physics, 187, 544-549, 2003.

[2] Hamming, R.W., “Numerical Methods for Scientists and Engineers,” 2nd ed., Dover Publications, 1986.

(If you are serious about the art of scientific computing, that Dover edition of Hamming’s book is the best investment you could make with a very few bucks.)