Motivation and Background

Yet another installment in the Lorenz63 series, this time motivated by a commenter on Climate Etc. In response to the oft-repeated claim that the predictability limitations of nonlinear dynamical systems are not a problem for climate prediction, Tomas Milanovic claims that time averages are chaotic too. Lorenz would seem to agree: “most climatic elements, and certainly climatic means, are not predictable in the first sense at infinite range, since a non-periodic series cannot be made periodic through averaging [1].” We’re not going to just take his word for it. We’ll see if we can demonstrate this with our toy model.

That’s the motivation, but before we get to toy model results a little background discussion is in order. In a previous entry I illustrated the different types of functionals that you might be interested in depending on whether you are doing weather prediction or climate prediction. I also made the remark, “A climate prediction is trying to provide a predictive distribution of a time-averaged atmospheric state which is (hopefully) independent of time far enough into the future.” It was pointed out to me that this is a testable hypothesis [2], and that the empirical evidence doesn’t seem to support the existence of time-averages (or other functionals) describing the Earth’s climate system that are independent of time [3]. In fact, the above assumption was critiqued by none other than Lorenz in 1968 [4]. In that paper he states,

Questions concerning the existence and uniqueness of long-term statistics fall into the realm of ergodic theory. [...] In the case of nonlinear equations, the uniqueness of long-term statistics is not assured. From the way in which the problem is formulated, the system of equations, expressed in deterministic form, together with a specified set of initial conditions, determines a time-dependent solution extending indefinitely into the future, and therefore determines a set of long-term statistics. The question remains as to whether such statistics are independent of the choice of initial conditions.

He goes on to define a system as transitive if the long-term statistics are independent of initial condition, and intransitive if there are “two or more sets of long-term statistics, each of which has a greater-than-zero probability of resulting from randomly chosen initial conditions.” Since the concept of climate change has no meaning for statistics over infinitely long intervals, he then defines a system as almost intransitive if the statistics at infinity are unique, but the statistics over finite intervals depend (perhaps even sensitively) on initial conditions. In the context of policy relevance we are generally interested in behavior over finite time-intervals.
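Lorenz’s “almost intransitive” case is easy to poke at with the toy model. The sketch below is not the code behind this post’s figures; it assumes the standard parameters (σ = 10, ρ = 28, β = 8/3), a fixed-step RK4 integrator with dt = 0.01, and an arbitrary initial condition. It then compares time averages of z from two initial conditions that differ by 10⁻⁸ in x, over a short window and a long one.

```python
# Sketch: finite-interval time averages of Lorenz63 from two nearby initial
# conditions. Parameters, integrator, step size and windows are assumptions.


def lorenz63(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz (1963) equations (standard parameters)."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    def shift(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz63(state)
    k2 = lorenz63(shift(state, k1, dt / 2.0))
    k3 = lorenz63(shift(state, k2, dt / 2.0))
    k4 = lorenz63(shift(state, k3, dt))
    return tuple(s + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def trajectory(state, dt, n_steps):
    """All states visited, including the initial one (n_steps + 1 entries)."""
    states = [state]
    for _ in range(n_steps):
        state = rk4_step(state, dt)
        states.append(state)
    return states


def mean(values):
    return sum(values) / len(values)


dt, n_steps = 0.01, 20000                 # integrate to t = 200
start = (1.0, 1.0, 20.0)                  # arbitrary initial condition
nudged = (start[0] + 1e-8, start[1], start[2])

traj_a = trajectory(start, dt, n_steps)
traj_b = trajectory(nudged, dt, n_steps)
z_a = [s[2] for s in traj_a]
z_b = [s[2] for s in traj_b]

# Separation of the final states: they started 1e-8 apart.
final_gap = sum((p - q) ** 2 for p, q in zip(traj_a[-1], traj_b[-1])) ** 0.5

short_win = slice(4000, 4100)   # one time unit, t in [40, 41)
long_win = slice(1000, 20000)   # 190 time units, t in [10, 200)
short_means = (mean(z_a[short_win]), mean(z_b[short_win]))
long_means = (mean(z_a[long_win]), mean(z_b[long_win]))

print(f"final separation: {final_gap:.3g}")
print(f"1-unit means of z:   {short_means[0]:.2f} vs {short_means[1]:.2f}")
print(f"190-unit means of z: {long_means[0]:.2f} vs {long_means[1]:.2f}")
```

The final states end up far apart, and the one-unit averages taken after the trajectories have decorrelated will typically disagree, while both 190-unit averages land near the long-run mean of z (roughly 23.5). That is the almost-intransitive picture in miniature: statistics over finite windows carry the imprint of the initial condition, and only much longer windows settle down.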

In fact, from what I’ve been able to find, large-scale spatial averages of the climate system’s state (or coherent structures, which you could track by suitable projections or filtering) face predictability limits similar to those of un-averaged states. The predictability just decays at a slower rate. So instead of predictive limits on the order of a few weeks for weather-like functionals, the more climate-like functionals become unpredictable on slower time-scales. There’s no magic here; things don’t suddenly become predictable a couple of decades or a century hence because you take an average. Averaging or filtering may only change the rate at which errors for that functional grow (because in spatio-temporal chaos different structures, or state vectors, have different error growth rates and reach saturation at different times). Again Lorenz puts it well: “the theory which assures us of ultimate decay of atmospheric predictability says nothing about the rate of decay” [1]. Recent work shows that initialization matters for decadal prediction, and that the predictability of various functionals decays at different rates [5]. For instance, sea surface temperature anomalies are predictable at longer forecast horizons than surface temperatures over land. Hindcasts of large spatial averages on decadal time-scales have shown skill in the last two decades of the past century (though they had trouble beating a persistence forecast for much of the rest of the century) [6].

I’ve noticed in online discussions about climate science that some people think the problem of establishing long-term statistics for nonlinear systems is a solved one. That is not the case for the complex, nonlinear systems we are generally most interested in (there are results for our toy model, though [7, 8]). I think this snippet sums things up well:

Atmospheric and oceanic forcings are strongest at global equilibrium scales of 10⁷ m and seasons to millennia. Fluid mixing and dissipation occur at microscales of 10⁻³ m and 10⁻³ s, and cloud particulate transformations happen at 10⁻⁶ m or smaller. Observed intrinsic variability is spectrally broad band across all intermediate scales. A full representation for all dynamical degrees of freedom in different quantities and scales is uncomputable even with optimistically foreseeable computer technology. No fundamentally reliable reduction of the size of the AOS [atmospheric oceanic simulation] dynamical system (i.e., a statistical mechanics analogous to the transition between molecular kinetics and fluid dynamics) is yet envisioned. [9]

Here McWilliams is making a point similar to that made by Lorenz in [4] about establishing a statistical mechanics for climate. This would be great if it happened, because that would mean that the problem of turbulence would be solved for us engineers too. Right now the best we have (engineers interested in turbulent flows and climate scientists too) is empirically adequate models that are calibrated to work well in specific corners of reality.

Lorenz was also responsible for another useful pair of concepts concerning predictability: predictability of the first and second kind [1]. If you care about the time-accurate evolution of the order of states, then you are interested in predictability of the first kind. If, however, you do not care about the order, but only the statistics, then you are concerned with predictability of the second kind. Unfortunately, Lorenz’s concepts of first and second kind predictability have been morphed into a claim that first kind predictability is about solving initial value problems (IVPs) and second kind predictability is about solving boundary value problems (BVPs). For example, “Predictability of the second kind focuses on the boundary value problem: how predictable changes in the boundary conditions that affect climate can provide predictive power [5].” This is unsound. If you read Lorenz closely, you’ll see that the important open question he was exploring, whether the climate is transitive, intransitive or almost intransitive, has been assumed away by the spurious association of kinds of predictability with kinds of problems [1]. Lorenz never made this mistake; he was always clear that the difference in kinds of predictability depends on the functionals you are interested in, not on whether it is appropriate to solve an IVP or a BVP (what reason could you have for expecting meaningful frequency statistics from a solution to a BVP?). Whether the statistics forget the initial conditions depends on the sort of system you have: in an intransitive or almost intransitive system even climate-like functionals depend on the initial conditions.

A good early paper on applying information theory concepts to climate predictability is by Leung and North [10], and there is a more recent review article that covers the basic concepts by DelSole and Tippett [11].

Recurrence Plots

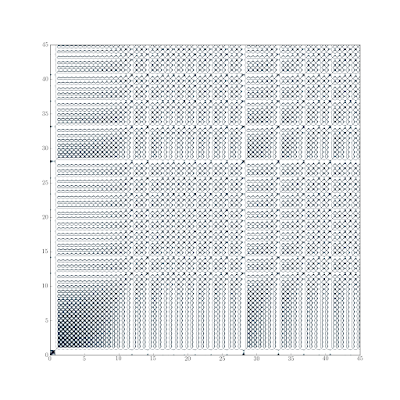

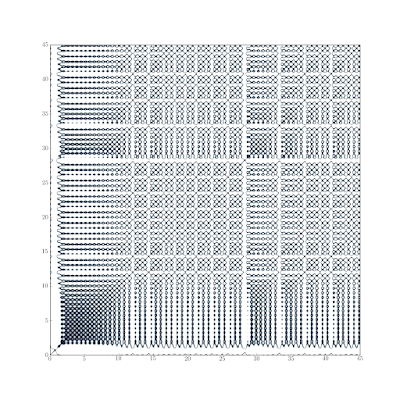

Recurrence plots are useful for getting a quick qualitative feel for the type of response exhibited by a time series [12, 13]. First we run a small initial condition (IC) ensemble with our toy model. The computer experiment consists of perturbations to the initial conditions (I chose the size of the perturbation so the ensemble would blow up around t = 12). Rather than sampling from a distribution for the members of the ensemble, I chose them according to a stochastic collocation (this also helps in getting the same results every time).
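Stochastic collocation replaces random draws with a fixed set of quadrature nodes and weights, which is why the ensemble comes out identical on every run. The post doesn’t say which rule or how many perturbed dimensions were used, so this sketch assumes the simplest case: one Gaussian perturbation to x(0), placed at the nodes of the 5-point probabilists’ Gauss-Hermite rule. The perturbation size `eps` and the center point are placeholders, not the values tuned for the figures.

```python
import math

# Assumed rule: probabilists' Gauss-Hermite with 5 nodes.
# Nodes are the roots of He_5(xi) = xi**5 - 10*xi**3 + 15*xi; the weights are
# normalised to sum to 1, i.e. they integrate against a standard normal density.
_A = math.sqrt(5.0 - math.sqrt(10.0))
_B = math.sqrt(5.0 + math.sqrt(10.0))
_WA = (7.0 + 2.0 * math.sqrt(10.0)) / 60.0
_WB = (7.0 - 2.0 * math.sqrt(10.0)) / 60.0
NODES = (-_B, -_A, 0.0, _A, _B)
WEIGHTS = (_WB, _WA, 8.0 / 15.0, _WA, _WB)


def collocation_ensemble(center, eps):
    """One member per node: x(0) shifted by eps * xi, y(0) and z(0) unchanged."""
    x0, y0, z0 = center
    return [(x0 + eps * xi, y0, z0) for xi in NODES]


def quadrature_mean(values):
    """Weighted ensemble mean, i.e. the quadrature estimate of E[f(xi)]."""
    return sum(w * v for w, v in zip(WEIGHTS, values))


# eps and the center are placeholders, not the values used for the figures.
members = collocation_ensemble((1.0, 1.0, 20.0), eps=1e-3)
for w, m in zip(WEIGHTS, members):
    print(f"weight {w:.4f}   x(0) = {m[0]:.6f}")

print("E[xi^2] ~", quadrature_mean([xi ** 2 for xi in NODES]))
print("E[xi^4] ~", quadrature_mean([xi ** 4 for xi in NODES]))
```

A 5-point Gauss-Hermite rule integrates polynomials in the perturbation up to degree 9 exactly, so the second and fourth moments of the standard normal (1 and 3) come out to round-off. Ensemble statistics at later times are then weighted sums over the members rather than plain averages.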

One thing these two plots make clear is that it doesn’t make much sense to compare individual trajectories with the ensemble mean. The mean is a parameter of a distribution describing a population of which the trajectories are members. While the trajectories are all orbits on the attractor, the mean is not.

Comparing the chaotic recurrence plots with the plots below of a periodic series and a stochastic series illustrates the qualitative differences in appearance.
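A recurrence plot is a thresholded distance matrix: R[i][j] = 1 when states i and j are closer than some radius r [12]. This sketch uses that textbook definition with Euclidean distance; the radius, series length, and the sine and Gaussian-noise series are my own choices for illustration, not the settings behind the figures. It checks the feature that separates the two reference cases: a periodic series produces unbroken diagonal lines at multiples of its period, while noise produces scattered isolated points.

```python
import math
import random


def recurrence_matrix(states, r):
    """R[i][j] = 1 if the Euclidean distance between states i and j is below r."""
    n = len(states)
    return [[1 if math.dist(states[i], states[j]) < r else 0 for j in range(n)]
            for i in range(n)]


def diagonal_fraction(R, lag):
    """Fraction of recurrent points on the diagonal R[i][i + lag]."""
    n = len(R)
    hits = [R[i][i + lag] for i in range(n - lag)]
    return sum(hits) / len(hits)


period, n, r = 25, 200, 0.1
# Scalar series are stored as 1-tuples so the same code works for 3-D states.
periodic = [(math.sin(2.0 * math.pi * k / period),) for k in range(n)]
rng = random.Random(0)  # fixed seed so the example is reproducible
stochastic = [(rng.gauss(0.0, 1.0),) for _ in range(n)]

R_per = recurrence_matrix(periodic, r)
R_sto = recurrence_matrix(stochastic, r)

print("periodic, diagonal at one period:  ", diagonal_fraction(R_per, period))
print("stochastic, diagonal at same lag:  ", diagonal_fraction(R_sto, period))
```

Feeding a list of Lorenz63 (x, y, z) states into `recurrence_matrix` gives the chaotic case, which typically shows short, broken diagonal segments: partial recurrence, somewhere between the two reference series.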

Clearly, both the ensemble mean and the individual trajectory are chaotic series, sort of “between” periodic and stochastic in their appearance. Ensemble averaging doesn’t make our chaotic series non-chaotic; what about time averaging?

Predictability Decay

How does averaging affect the decay of predictability for the state of the Lorenz63 system, and can we measure this effect? We can track how the predictability of the future state decays, given knowledge of the initial state, by using the relative entropy. There are other possible measures, such as mutual information [10], but since we’ve already got our ensemble, we can just use entropy like we did before. Rather than a simple moving average, I’ll be calculating an exponentially weighted one using an FFT-based approach, of course (there are some edge effects we’d need to worry about if this were a serious analysis, but we’ll ignore them for now). The entropy for the ensemble is shown for three different smoothing levels in Figure 4 (the high entropy prior to t = 5 for the smoothed series is spurious, because the smoothing is calculated with an FFT and I didn’t pad the series).
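Here’s a minimal sketch of where that edge effect comes from. It assumes exponential weights w_k = λ(1 - λ)^k on past samples, so smaller λ means more smoothing, consistent with the direction described for Figure 4. Multiplying FFTs without zero-padding yields a circular convolution; to keep the example dependency-free, it evaluates that circular sum directly (O(N²)) instead of with an FFT, and compares it with the ordinary recursive filter.

```python
def ewma_recursive(x, lam):
    """y[n] = lam*x[n] + (1 - lam)*y[n-1] with y[-1] = 0 (no wrap-around)."""
    y, prev = [], 0.0
    for v in x:
        prev = lam * v + (1.0 - lam) * prev
        y.append(prev)
    return y


def ewma_circular(x, lam):
    """Circular convolution of x with w[k] = lam*(1 - lam)**k, k = 0..N-1.

    This is what multiplying the FFTs of x and w returns when neither is
    zero-padded: lags that run off the start of x wrap around to its end.
    """
    n = len(x)
    w = [lam * (1.0 - lam) ** k for k in range(n)]
    return [sum(w[k] * x[(i - k) % n] for k in range(n)) for i in range(n)]


lam = 0.1
x = [k / 100.0 for k in range(200)]  # a ramp, so the end of the series differs from its start
edge_error = [abs(a - b) for a, b in zip(ewma_circular(x, lam), ewma_recursive(x, lam))]

print(f"error at the first sample:       {edge_error[0]:.3f}")
print(f"largest error from sample 150 on: {max(edge_error[150:]):.1e}")
```

Near the start, the circular version mixes in samples from the end of the series; the contamination decays like (1 - λ)^i, so it lasts longer for heavier smoothing (smaller λ). Zero-padding the series before the FFT, or discarding several multiples of 1/λ samples at the start, removes the artifact.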

While smoothing does lower the entropy of the ensemble (lower entropy for more smoothing / smaller λ), it still experiences the same sort of “blow-up” as the unsmoothed trajectory. This indicates problems for predictability even for our time-averaged functionals. Guess what? The recurrence plot indicates that our smoothed trajectory is still chaotic!
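As a sketch of the entropy bookkeeping, the block below fits normal distributions to an ensemble snapshot and to a climatological sample, then computes the ensemble’s differential entropy and its relative entropy with respect to climatology. The Gaussian fit is an assumption for illustration (a histogram or kernel estimate would capture the non-Gaussian spread of a Lorenz63 ensemble better), and the sample values are arbitrary illustrative inputs, not output from the runs in this post.

```python
import math


def mean_var(samples):
    """Sample mean and unbiased sample variance."""
    m = sum(samples) / len(samples)
    v = sum((s - m) ** 2 for s in samples) / (len(samples) - 1)
    return m, v


def gaussian_entropy(samples):
    """Differential entropy, in nats, of a normal fit: 0.5*ln(2*pi*e*var)."""
    _, v = mean_var(samples)
    return 0.5 * math.log(2.0 * math.pi * math.e * v)


def gaussian_relative_entropy(forecast, climatology):
    """Relative entropy D(forecast || climatology) between normal fits, in nats."""
    mf, vf = mean_var(forecast)
    mc, vc = mean_var(climatology)
    return 0.5 * (math.log(vc / vf) + (vf + (mf - mc) ** 2) / vc - 1.0)


climatology = [float(k) for k in range(41)]   # illustrative stand-in for climatology
early = [22.0, 22.5, 23.0, 23.5, 24.0]        # tight ensemble, shortly after the start
late = [5.0, 12.0, 20.0, 28.0, 35.0]          # ensemble spread across the attractor

for name, ens in (("early", early), ("late", late)):
    print(f"{name}: entropy {gaussian_entropy(ens):.2f} nats, "
          f"relative entropy {gaussian_relative_entropy(ens, climatology):.4f} nats")
```

A tight ensemble has low entropy and large relative entropy, meaning the forecast still carries information beyond climatology; once the members spread across the attractor, the relative entropy falls toward zero and the forecast is no better than climatology. In this Gaussian picture anything that shrinks the ensemble variance, smoothing included, lowers the entropy, but it doesn’t prevent the eventual spread.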

This result shouldn't be too surprising: moving averages, or smoothing of whatever type you fancy, are linear operations. It would probably take a pretty clever nonlinear transformation to turn a chaotic series into a non-chaotic one (think about how the series in this case is generated in the first place). I wouldn't expect any combination of linear transformations to accomplish that.
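The linearity point is easy to check numerically. This sketch uses a recursive exponential smoother (an assumed stand-in; any moving average would pass the same test) and checks superposition, smooth(a·u + b·v) = a·smooth(u) + b·smooth(v), then runs the same check on the Lorenz63 right-hand side, where the x·z and x·y products break it. The test series, coefficients and states are arbitrary choices.

```python
import math


def ewma(x, lam):
    """Recursive exponential smoother, y[n] = lam*x[n] + (1-lam)*y[n-1], y[-1] = 0."""
    y, prev = [], 0.0
    for v in x:
        prev = lam * v + (1.0 - lam) * prev
        y.append(prev)
    return y


def lorenz63(s, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Lorenz63 right-hand side; the x*z and x*y terms make it nonlinear."""
    x, y, z = s
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def combine(a, p, b, q):
    """Element-wise a*p + b*q for two equal-length sequences."""
    return [a * pi + b * qi for pi, qi in zip(p, q)]


a, b, lam = 2.5, -0.75, 0.2

# Superposition for the smoother: smooth the combination vs combine the smoothed series.
u = [math.sin(0.3 * k) for k in range(100)]
v = [math.cos(0.7 * k) ** 3 for k in range(100)]
smooth_of_sum = ewma(combine(a, u, b, v), lam)
sum_of_smooth = combine(a, ewma(u, lam), b, ewma(v, lam))
max_gap = max(abs(p - q) for p, q in zip(smooth_of_sum, sum_of_smooth))

# Same test for the Lorenz63 vector field at two arbitrary states.
s1, s2 = (1.0, 1.0, 1.0), (2.0, 0.0, 3.0)
f_combined = lorenz63(combine(a, s1, b, s2))
f_superposed = combine(a, lorenz63(s1), b, lorenz63(s2))
rhs_gap = max(abs(p - q) for p, q in zip(f_combined, f_superposed))

print(f"smoother superposition error: {max_gap:.2e}")
print(f"Lorenz63 superposition error: {rhs_gap:.2f}")
```

The smoother satisfies superposition to round-off for any λ, while the vector field that generates the series does not. Smoothing reshapes the output of the nonlinear dynamics, but it can’t undo them.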

Conclusions

I’ll begin the end with another great point from McWilliams that should serve to temper our demands for predictive capability from climate models [9] (though I’ve not heard sub-grid fluctuations referred to as “computational noise”; that term makes me think of round-off error):

Among their other roles, parametrizations regularize the solutions on the grid scale by limiting fine-scale variance (also known as computational noise). This practice makes the choices of discrete algorithms quite influential on the results, and it removes the simulation from the mathematically preferable realm of asymptotic convergence with resolution, in which the results are independent of resolution and all well conceived algorithms yield the same answer.

If I had read this earlier, I wouldn’t have spent so much time searching for something that doesn’t exist.

Regardless of my tortured learning process, what do the toy models tell us? Our ability to predict the future is fundamentally limited. Not really an earth-shattering discovery; it seems a whole lot like common sense. Does this have any implication for how we make decisions? I think it does. Our choices should be robust with respect to these inescapable limitations. In engineering we look for broad optimums that are insensitive to design or requirements uncertainties. The same sort of design thinking applies to strategic decision making or policy design. The fundamental truism for us to remember in trying to make good decisions under the uncertainty caused by practical and theoretical constraints is that limits on predictability do not imply impotence.

References

[1] Lorenz, E. N., The Physical Basis of Climate and Climate Modeling, Vol. 16 of GARP publication series, chap. Climatic Predictability, World Meteorological Organization, 1975, pp. 132–136.

[2] Pielke Sr, R. A., “your query,” September 2010, electronic mail to the author.

[3] Rial, J. A., Pielke Sr, R. A., Beniston, M., Claussen, M., Canadell, J., Cox, P., Held, H., de Noblet-Ducoudré, N., Prinn, R., Reynolds, J. F., and Salas, J. D., “Nonlinearities, Feedbacks And Critical Thresholds Within The Earth’s Climate System,” Climatic Change, Vol. 65, No. 1-2, 2004, pp. 11–38.

[4] Lorenz, E. N., “Climatic Determinism,” Meteorological Monographs, Vol. 8, No. 30, 1968.

[5] Collins, M. and Allen, M. R., “Assessing The Relative Roles Of Initial And Boundary Conditions In Interannual To Decadal Climate Predictability,” Journal of Climate, Vol. 15, No. 21, 2002, pp. 3104–3109.

[6] Lee, T. C., Zwiers, F. W., Zhang, X., and Tsao, M., “Evidence of Decadal Climate Prediction Skill Resulting from Changes in Anthropogenic Forcing,” Journal of Climate, Vol. 19, 2006.

[7] Tucker, W., The Lorenz Attractor Exists, Ph.D. thesis, Uppsala University, 1998.

[8] Kehlet, B. and Logg, A., “Long-Time Computability of the Lorenz System,” http://lorenzsystem.net/.

[9] McWilliams, J. C., “Irreducible Imprecision In Atmospheric And Oceanic Simulations,” Proceedings of the National Academy of Sciences, Vol. 104, No. 21, 2007, pp. 8709–8713.

[10] Leung, L.-Y. and North, G. R., “Information Theory and Climate Prediction,” Journal of Climate, Vol. 3, 1990, pp. 5–14.

[11] DelSole, T. and Tippett, M. K., “Predictability: Recent insights from information theory,” Reviews of Geophysics, Vol. 45, 2007.

[12] Eckmann, J.-P., Kamphorst, S. O., and Ruelle, D., “Recurrence Plots of Dynamical Systems,” EPL (Europhysics Letters), Vol. 4, No. 9, 1987, pp. 973.

[13] Marwan, N., “A historical review of recurrence plots,” The European Physical Journal - Special Topics, Vol. 164, 2008, pp. 3–12, 10.1140/epjst/e2008-00829-1.

“small random variations in solar input (not to mention butterflies)” [as what makes weather random over the long term]

Chaos as you have discussed it requires fixed control parameters (absolutely constant solar input) and no external sources of variation not accounted for in the equations (no butterflies). In your supposed response to my comment you gave zero attention to this central issue. Others here have been accused of being non-responsive, but I have to say that is pretty non-responsive on your part.

The fact is as soon as there is any external perturbation of a chaotic system not accounted for in the dynamical equations, you have bumped the system from one path in phase space to another. Earth’s climate is continually getting bumped by external perturbations small and large. The effect of these is to move the actual observed trajectory of the system randomly – yes randomly – among the different possible states available for given energy/control parameters etc.

The randomness comes not from the chaos, but from external perturbation. Chaos amplifies the randomness so that at a time sufficiently far in the future after even the smallest perturbation, the actual state of the system is randomly sampled from those available. That random sampling means it has real statistics. The “states available” are constrained by boundaries (solar input, surface topography, etc.), which makes the climate problem, the problem of the statistics of weather, a boundary value problem (BVP). There are many techniques for studying BVPs, one of which is simply to randomly sample the states using as physical a model as possible to get the right statistics. That’s what most climate models do. That doesn’t mean it’s not a BVP.

Tomas’ comment that the 3-body system is not even “predictable statistically (e.g. you cannot put a probability on the event ‘Mars will be ejected from the solar system in N years’)” is true in the strict sense of the exact mathematics assuming no external perturbations. That’s simply because for a deterministic system something will either happen or it won’t; there’s no issue of probability about it at all. But as soon as you add any sort of noise, your perfect chaotic system becomes a mere stochastic one over long time periods, and probabilities really do apply.

A nice review of the relationships between chaos, probability and statistics is this article from 1992:

“Statistics, Probability and Chaos” by L. Mark Berliner, Statist. Sci. Volume 7, Number 1 (1992), 69-90.

http://projecteuclid.org/DPubS?service=UI&version=1.0&verb=Display&handle=euclid.ss/1177011444

and see some of the discussion that followed in that journal (comments linked on that Project Euclid page).

Where’s the meat? Where’s the results for the problems we care about? I can calculate results for logistic maps and Lorenz ’63 on my laptop (and the attractor for that particular toy exists).