This isn't about the normal death spiral of increasing unit costs driving production cuts, which increases unit costs, which drives production cuts, which.... It's about another sort of price spiral caused by the US government's infatuation with sole-sourcing critical capabilities. I think this is largely due to technocrats trusting simple, static industrial-age cost models which support decisions dominated by returns from economies of scale. The basic logic of the decisions these models support (an equilibrium solution) is: "things will be cheaper with one supplier because the overhead will be amortized over bigger quantities."
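The static logic quoted above can be written down in a few lines. This is only a sketch of the kind of amortization model being criticized, not any agency's actual cost model; the function name and every number below are hypothetical and chosen purely for illustration.

```python
def unit_cost(fixed_overhead, variable_cost, quantity):
    """Static unit cost: overhead amortized over the buy, plus per-unit cost."""
    return fixed_overhead / quantity + variable_cost


# Hypothetical numbers, chosen only for illustration.
FIXED_OVERHEAD = 1000.0  # overhead carried by each supplier
VARIABLE_COST = 10.0     # marginal cost of one unit
TOTAL_QUANTITY = 100     # units the government intends to buy

# One supplier builds everything; two suppliers split the buy evenly.
one_supplier = unit_cost(FIXED_OVERHEAD, VARIABLE_COST, TOTAL_QUANTITY)
two_suppliers = unit_cost(FIXED_OVERHEAD, VARIABLE_COST, TOTAL_QUANTITY / 2)

print(one_supplier, two_suppliers)  # prints 20.0 30.0
```

With these made-up inputs the single supplier always looks cheaper, which is exactly the equilibrium answer the model is built to give. Nothing in it lets the price move over time.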

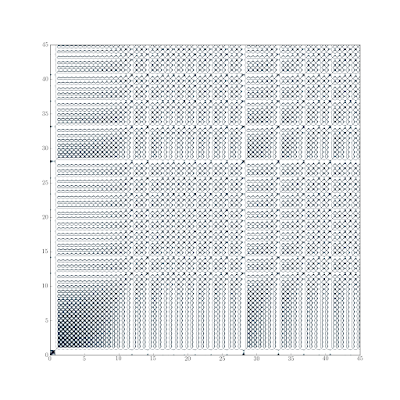

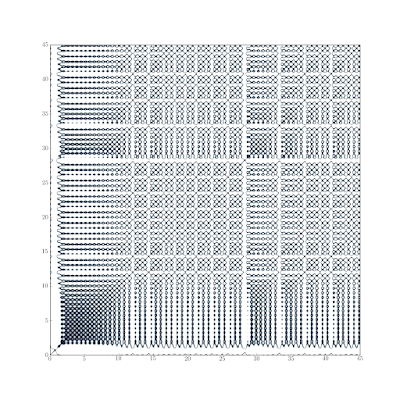

The basic mistake these models make is neglecting the dynamics. Price is a dynamic thing. If the capability is very critical, and there is only one supplier, then there is almost no ceiling on how high the price can rise. The price under those dynamics settles just below the point where you'd stop paying for the capability in favor of a more important one (I'll call this the buyer's "level of pain"). The alternative dynamics occur when there are multiple competing suppliers. The price under these dynamics approaches the economic cost asymptotically (economic cost takes into account barriers to entry and opportunity costs). Here's a simple graph illustrating price behavior under these two situations.

Figure: Price Dynamics
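The two price paths described above can be sketched with a toy model. I am assuming a simple first-order relaxation toward a target price, which is my own simplification and not a fitted model; the function name, the rate, and all the dollar figures are hypothetical.

```python
def price_path(start, target, rate, steps):
    """Move the price a fixed fraction of the remaining gap toward target each step."""
    prices = [start]
    for _ in range(steps):
        prices.append(prices[-1] + rate * (target - prices[-1]))
    return prices


# Hypothetical values, for illustration only.
ECONOMIC_COST = 20.0  # cost including barriers to entry / opportunity costs
PAIN_LEVEL = 100.0    # price at which the buyer would drop the capability
START_PRICE = 40.0

# Sole source: price climbs toward a level just below the buyer's pain level.
sole_source = price_path(START_PRICE, 0.99 * PAIN_LEVEL, rate=0.2, steps=30)

# Competition: price decays toward the economic cost.
competitive = price_path(START_PRICE, ECONOMIC_COST, rate=0.2, steps=30)

for t in (0, 10, 30):
    print(t, round(sole_source[t], 2), round(competitive[t], 2))
```

Both paths start at the same price. The only difference is which level the market structure pulls the price toward.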

We see these dynamics play out in a variety of defense acquisitions. The F-35 engine program and the Evolved Expendable Launch Vehicle program are two exemplars that are currently making the news.

The F-35 engine procurement was initially structured to support two suppliers during development, much like the engine programs for the F-15 and F-16. There are operational advantages to having two engines. If a problem is found in one model, only half of the Air Force's tactical aircraft would have to be grounded while the solution is found. The other advantage comes from the suppliers competing with each other on price for various lots of engines. The disadvantages are the overhead and development cost of carrying two suppliers, and possibly a larger logistics footprint for supporting two different engine models.

The recent news is that the budget does not include funds for the second engine. Not a week after the passage of this budget, which makes the engine buy a sole-source deal, we have an Undersecretary of Defense for Acquisition complaining about the price from the remaining supplier.

"I'm not happy, as I am with so many parts of all our programs, with (the P&W engine's) cost performance so far," Carter told the House of Representatives Appropriations subcommittee on defense on Wednesday. "We need to drive the costs down." [...] "Our analysis does not show the payback," Carter told the subcommittee. He added that "people of good will come to different conclusions on this issue."

U.S. "not happy" with F-35 engine cost overruns

Did the analysis include the second supplier offering to assume the risks and go fixed price on the development? Is complaining about the price, and being really unhappy about paying it, but paying it anyway because there is no alternative, anything but empty political theater? Makes for great content in the trade rags: Acquisition Official gives contractor a stern talking to! Contractor hangs head in a suitably chastened way, "yes, our prices are very high for these unique capabilities, we are working hard to contain the costs for our customer." Much harrumphing is heard from various congresscritters, meanwhile the price continues to spiral higher...

In the case of the EELV, despite the fact that the Air Force paid for two parallel rocket development programs, we now have just a single supplier. The two launch service providers were so expensive that they could not compete in the commercial market. The business case for the two rockets hinged on them being able to make money in the commercial market and get their launch rates up. When no customers other than the US government could afford their high prices, they had to combine into a single consortium: ULA. So it's sole-source with two vehicles. Recall the various advantages and disadvantages of developing two products discussed above in the case of the F-35 engine. Now EELV has the worst of both worlds: high overhead and logistics costs to support two vehicles, and no competition or customer diversification to get flight rates up and bring prices down.

Launch-service providers agree that their viability, as well as their ability to keep costs down, is based on launch rhythm. The more often a vehicle launches, the more reliable it becomes. Scale economies are introduced as well in a virtuous cycle. One U.S. government official agreed that if SpaceX is now allowed to break ULA’s monopoly on U.S. government satellite launches as indicated by the memorandum of agreement, it could force ULA’s already high prices even higher as it eats into ULA’s current market. “In the longer term we may be faced with questions about whether one of them [ULA or SpaceX] can remain viable without direct subsidies, the same questions we faced with ULA,” this official said. “Then what do we do? We have a policy of assured access to space, which means at least two vehicles. The demand for launches has not increased since ULA was formed, so we could be heading toward a nearly identical situation in a few years. But we are spending taxpayers’ money and if we can find reliable launches that are less expensive, we are not going to ignore that.”

SpaceX Receives Boost in Bid To Loft National Security Satellites

It is interesting to note the thinking of the unnamed government official. He doesn't recognize the dynamics of the situation. He's living in a sole-source mindset; it just happens that he's going to change to this new, lower cost source.

NASA has a pricing model that shows savings from "outsourcing development", but not because of any interesting dynamics that they've included. The justification is the same as the one underlying the broken decisions about aircraft engines: "returns from economies of scale". The dynamics of competition remain ignored.

NASA Deputy Administrator Lori Garver, in a separate presentation here April 12, said the agency’s policy of pushing rocket-development work onto the private sector will only reach maximum benefit if other customers also purchase the vehicles developed initially with NASA funding. Referring specifically to SpaceX, Garver said a conventional NASA procurement of a Falcon 9-class rocket would cost nearly $4.5 billion according to a NASA-U.S. Air Force cost model that includes the vehicle’s first flight. Outsourcing development to SpaceX, she said, would cut that figure by 60 percent, but only if other customers purchase the vehicle, thus permitting scale economies to reach maximum effect.

After Servicing Space Station SpaceX's Priority is Taking on EELV

The way out of the death spiral is program dependent. In the EELV case it took a new entrant who has signed commercial contracts in addition to chasing the government launches (from a couple of different agencies). SpaceX has built in significant customer diversification that ULA never developed (though this was hoped for in the early justifications of the program structure). Why did Lockheed-Martin and Boeing, and subsequently ULA, never develop this customer diversification? Because they didn't have to. The government guaranteed their continued existence. SpaceX, on the other hand, has no such guarantee. The only option for their continued existence is to make a profit from more than one customer.

In the F-35 engine case, DoD has decided that even the assumption of development risk by the second supplier under a fixed price contract is not enough to close the case. This puzzles me. Maybe the second engine has become a symbol of "duplication and waste" rather than "competition and efficiency". If so, then DoD has given the primary engine supplier a way to "frame" their competition out of existence with political argument (removing the need for them to earn market share honestly).

The outlook for lower cost space access looks good. However, as long as the DoD is stuck in its current frame, there is great opportunity for political theater that will serve mainly as a content generator for defense trade publications and a distraction from the root cause of steadily rising tactical aircraft engine costs into the future.

“small random variations in solar input (not to mention butterflies)” [as what makes weather random over the long term]

Chaos as you have discussed it requires fixed control parameters (absolutely constant solar input) and no external sources of variation not accounted for in the equations (no butterflies). You gave zero attention in your supposed response to my comment to this central issue. Others here have been accused of being non-responsive, but I have to say that is pretty non-responsive on your part.

The fact is that as soon as there is any external perturbation of a chaotic system not accounted for in the dynamical equations, you have bumped the system from one path in phase space to another. Earth’s climate is continually getting bumped by external perturbations small and large. The effect of these is to move the actual observed trajectory of the system randomly – yes randomly – among the different possible states available for the given energy, control parameters, etc.

The randomness comes not from the chaos, but from external perturbation. Chaos amplifies the randomness so that at a time sufficiently far in the future after even the smallest perturbation, the actual state of the system is randomly sampled from those available. That random sampling means it has real statistics. The “states available” are constrained by boundaries – solar input, surface topography, etc. – which make the climate problem – the problem of the statistics of weather – a boundary value problem (BVP). There are many techniques for studying BVPs – one of which is simply to randomly sample the states using as physical a model as possible to get the right statistics. That’s what most climate models do. That doesn’t mean it’s not a BVP.

Tomas’ comment about the 3-body system being not even “predictable statistically (e.g. you can not put a probability on the event ‘Mars will be ejected from the solar system in N years’)” is true in the strict sense of the exact mathematics assuming no external perturbations. That’s simply because for a deterministic system something will either happen or it won’t; there’s no issue of probability about it at all. But as soon as you add any sort of noise, your perfect chaotic system becomes a mere stochastic one over long time periods, and probabilities really do apply.
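The amplification of a tiny external bump is easy to see in a toy system. Here is a minimal sketch using the logistic map with r = 4; it is my own choice of illustration and not a climate model. The starting point, the size of the bump (1e-12), and the step count are arbitrary assumptions.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x) and return every state."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


X0 = 0.2      # arbitrary starting state
BUMP = 1e-12  # hypothetical "butterfly": one tiny external perturbation
STEPS = 200

reference = logistic_trajectory(X0, STEPS)
bumped = logistic_trajectory(X0 + BUMP, STEPS)

# Gap between the two runs at every step.
gap = [abs(a - b) for a, b in zip(reference, bumped)]

# Early on the runs are indistinguishable; later they are unrelated.
print("gap at step 5:", gap[5])
print("largest gap after step 60:", max(gap[60:]))
```

The equations are identical in both runs. All of the later difference comes from the one bump at the start, which is the sense in which the chaos amplifies the randomness rather than creating it.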

A nice review of the relationships between chaos, probability and statistics is this article from 1992:

“Statistics, Probability and Chaos” by L. Mark Berliner, Statist. Sci. Volume 7, Number 1 (1992), 69-90.

http://projecteuclid.org/DPubS?service=UI&version=1.0&verb=Display&handle=euclid.ss/1177011444

and see some of the discussion that followed in that journal (comments linked on that Project Euclid page).

Where’s the meat? Where’s the results for the problems we care about? I can calculate results for logistic maps and Lorenz ’63 on my laptop (and the attractor for that particular toy exists).